It has been great to see what others are doing with PowerShell Universal. Here are some of the things that we are doing with it today:

API

For a while, I have needed to create APIs that other systems can use. PowerShell Universal lets me create these APIs and fully manage their output. Here are some of the APIs I have created:

• Authoritative Address

o We have over 900 locations and have struggled in the past to have a single source of truth for our addresses. This API lets me pull from our authoritative source the correct address for a given unit and provide it to multiple different systems without them needing to store the address directly.

• Reporting

o I needed to pull some data from SQL and provide it to a vendor so that the information could be displayed in their system. This made it easy to generate the data for the report and pass it on via an API.
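As a rough illustration of the authoritative address idea, here is a minimal sketch. The unit numbers, addresses, and the `Get-UnitAddress` function are hypothetical, and an in-memory hashtable stands in for the real authoritative source. The commented-out `New-PSUEndpoint` line shows one way a lookup like this might be exposed as an API; the URL is an assumption.

```powershell
# Hypothetical stand-in for the authoritative source (made-up units and addresses).
$script:AddressSource = @{
    '0001' = [pscustomobject]@{ Unit = '0001'; Street = '100 Main St'; City = 'Springfield'; State = 'IL'; Zip = '62701' }
    '0002' = [pscustomobject]@{ Unit = '0002'; Street = '200 Oak Ave'; City = 'Dayton'; State = 'OH'; Zip = '45402' }
}

function Get-UnitAddress {
    param(
        [Parameter(Mandatory)]
        [string]$Unit
    )
    # Return the single authoritative record, or $null when the unit is unknown.
    if ($script:AddressSource.ContainsKey($Unit)) {
        return $script:AddressSource[$Unit]
    }
    return $null
}

# One possible way to expose this through PowerShell Universal (URL is an assumption):
# New-PSUEndpoint -Url '/address/:unit' -Method 'GET' -Endpoint { Get-UnitAddress -Unit $Unit }

$address = Get-UnitAddress -Unit '0001'
```

Because every consuming system calls the same lookup, none of them needs to keep its own copy of the address.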

Dashboards

AdminToolkit

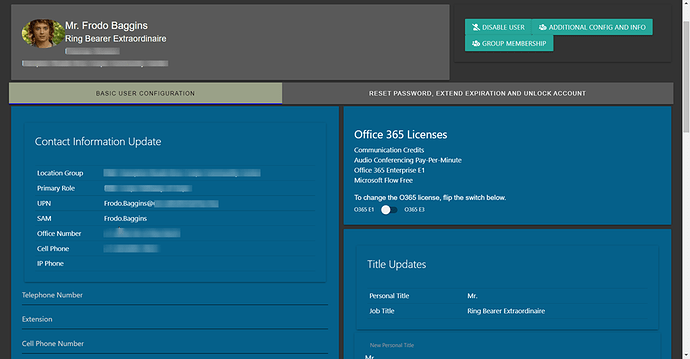

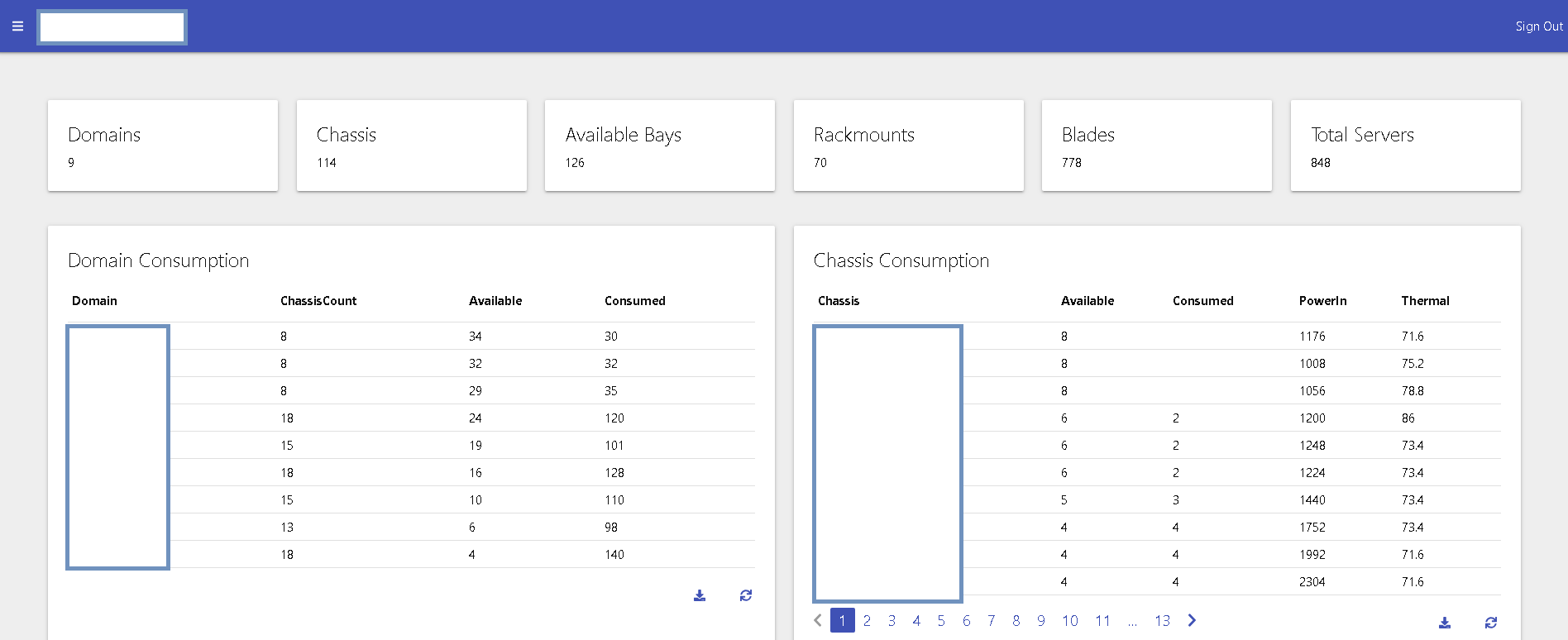

This is a large dashboard that is used by our techs. Rather than give our 80+ techs direct access to make changes, we use a proxy system that lets them update information in Active Directory and Microsoft 365. (This is still on the 2.9 dashboard, but I am working on upgrading it to PowerShell Universal.) To help with performance (we have over 10,000 user accounts and tons of groups, Teams, computers, etc.), I have a cache built in to handle the initial lookup. As I gather information on each item, I write it to SQL; generating the full set this way can take half an hour or more. I can then refresh the cache in the dashboard in a matter of seconds by pulling it in with a SQL query.
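The caching pattern could be sketched roughly like this. All names here are hypothetical, and a script block returning made-up rows stands in for the SQL query, so this is only an outline of the idea and not the dashboard's actual code.

```powershell
# In-memory cache with a refresh timestamp (all names here are hypothetical).
$script:DirectoryCache = @{
    Items         = @()
    LastRefreshed = $null
}

function Update-DirectoryCache {
    param(
        # Script block that returns the rows; a real version would run the SQL query.
        [Parameter(Mandatory)]
        [scriptblock]$Loader
    )
    $script:DirectoryCache.Items         = @(& $Loader)
    $script:DirectoryCache.LastRefreshed = Get-Date
}

function Find-CachedItem {
    param(
        [Parameter(Mandatory)]
        [string]$Name
    )
    # Wildcard match against the cached rows, so no live directory query is needed.
    $script:DirectoryCache.Items | Where-Object { $_.Name -like "*$Name*" }
}

# Usage with made-up rows standing in for the SQL result set.
Update-DirectoryCache -Loader {
    [pscustomobject]@{ Name = 'jsmith'; Type = 'User' }
    [pscustomobject]@{ Name = 'Finance-Team'; Type = 'Group' }
}
$found = Find-CachedItem -Name 'smith'
```

Keeping `LastRefreshed` next to the data makes it easy to show on screen how old the cached information is.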

In addition, most changes are done via a proxy system (a request is submitted to SQL on the tech’s behalf, and PowerShell then processes those requests). This allows me to do specific logging on each request and to maintain consistency, so that changes are made the same way by everyone.
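A minimal sketch of that request-and-process flow follows. The function names, status values, and the `DisableUser` action are hypothetical, and an in-memory list stands in for the SQL table that holds the requests.

```powershell
# Hypothetical in-memory queue; the setup described above stores requests in SQL.
$script:RequestQueue = [System.Collections.Generic.List[object]]::new()

function Submit-ChangeRequest {
    param(
        [Parameter(Mandatory)][string]$RequestedBy,
        [Parameter(Mandatory)][string]$Action,
        [Parameter(Mandatory)][string]$Target
    )
    $request = [pscustomobject]@{
        RequestedBy = $RequestedBy
        Action      = $Action
        Target      = $Target
        Submitted   = Get-Date
        Status      = 'Pending'
    }
    $script:RequestQueue.Add($request)
    return $request
}

function Invoke-PendingRequest {
    param(
        # Maps an action name to the one script block allowed to perform it.
        [Parameter(Mandatory)][hashtable]$Handlers
    )
    $pending = @($script:RequestQueue | Where-Object { $_.Status -eq 'Pending' })
    foreach ($request in $pending) {
        if (-not $Handlers.ContainsKey($request.Action)) {
            $request.Status = 'Rejected'
        }
        else {
            try {
                # Discard handler output so only log lines are returned.
                $null = & $Handlers[$request.Action] $request.Target
                $request.Status = 'Completed'
            }
            catch {
                $request.Status = 'Failed'
            }
        }
        # Every request is logged the same way, whoever submitted it.
        '{0} {1} {2} -> {3}' -f $request.RequestedBy, $request.Action, $request.Target, $request.Status
    }
}

$null = Submit-ChangeRequest -RequestedBy 'tech01' -Action 'DisableUser' -Target 'jsmith'
$log  = Invoke-PendingRequest -Handlers @{ DisableUser = { param($user) "disabling $user" } }
```

Since each action runs through a single handler, the change is made the same way no matter which tech asked for it.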

User Screens:

I cache some of the information so that it responds faster. At times, I show the last time the cache was refreshed.

OneDrive info and expansion panels for other information:

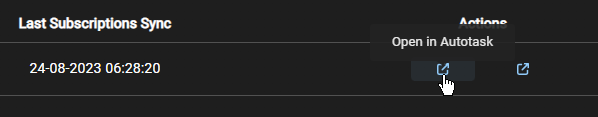

Group membership information that also shows if that membership has been synced to O365:

Add to a group:

In addition to managing users, we also manage groups.

Clicking on a group lets a tech see direct and indirect group membership and add users. The list of users that can be added is filtered by group type and location to help prevent users from being added to groups they shouldn’t be in.
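The filtering could look something like the sketch below. The rule table, property names, and sample data are all hypothetical; the actual rules that tie group type and location to eligible users are not described here.

```powershell
# Hypothetical rule table: which user types each group type accepts.
$script:AllowedUserTypes = @{
    Staff   = @('Employee')
    Project = @('Employee', 'Contractor')
}

function Get-EligibleUser {
    param(
        [Parameter(Mandatory)][object[]]$Users,
        [Parameter(Mandatory)][object]$Group
    )
    $allowed = $script:AllowedUserTypes[$Group.Type]
    # Keep only users at the group's location whose type the group accepts.
    $Users | Where-Object { $_.Location -eq $Group.Location -and $_.Type -in $allowed }
}

# Made-up sample data.
$sampleUsers = @(
    [pscustomobject]@{ Name = 'jsmith'; Type = 'Employee';   Location = 'Unit-0001' }
    [pscustomobject]@{ Name = 'bjones'; Type = 'Contractor'; Location = 'Unit-0001' }
    [pscustomobject]@{ Name = 'akhan';  Type = 'Employee';   Location = 'Unit-0002' }
)
$sampleGroup = [pscustomobject]@{ Name = 'Unit-0001-Staff'; Type = 'Staff'; Location = 'Unit-0001' }

$eligible = Get-EligibleUser -Users $sampleUsers -Group $sampleGroup
```

With this sample data, only `jsmith` is offered as a candidate, because the other two users fail either the type check or the location check.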

For computers that are online, I make some WMI calls to get live information about the computer, and I also pull information from AD:

For shared mailboxes, I allow techs to see who currently has permissions on the shared mailbox and update that list of permissions. They can also see basic Exchange information about each mailbox.

For Microsoft Teams, I am able to display some information to a tech that might help them when they are assisting end users. This includes Team membership (owners, members and guests), channels on a Team, SharePoint site URL and email address.

App Inventory

I’ve created a site that lists the various applications in use across our locations. This allows our techs to look up applications that they may not be familiar with and find out more about them. It includes the support tier for each application so they know who to escalate issues to.

KB articles that are specific to a given application can also be easily added for reference. I include information about the application, including what servers it may be running on and the “owner” of the application.

In addition, I use this to keep track of spending on each application (whether it is license renewal or software development). This allows me to know the lifetime spend on a product and how much we spend with a given vendor, and to keep track of invoices.
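As a small illustration of the spend tracking, totals per application or per vendor can be rolled up with `Group-Object` and `Measure-Object`. The invoice rows, property names, and the `Get-SpendTotal` function are made up for this sketch.

```powershell
# Made-up invoice rows; the real data would come from the inventory's own store.
$sampleInvoices = @(
    [pscustomobject]@{ Application = 'AppA'; Vendor = 'VendorX'; Category = 'License renewal';      Amount = 1200.00 }
    [pscustomobject]@{ Application = 'AppA'; Vendor = 'VendorX'; Category = 'Software development'; Amount = 800.00 }
    [pscustomobject]@{ Application = 'AppB'; Vendor = 'VendorY'; Category = 'License renewal';      Amount = 500.00 }
)

function Get-SpendTotal {
    param(
        [Parameter(Mandatory)][object[]]$Invoices,
        [Parameter(Mandatory)][ValidateSet('Application', 'Vendor')][string]$By
    )
    # Group the invoices by the chosen property and sum the amounts in each group.
    $Invoices | Group-Object -Property $By | ForEach-Object {
        [pscustomobject]@{
            Name  = $_.Name
            Total = ($_.Group | Measure-Object -Property Amount -Sum).Sum
        }
    }
}

$byApplication = Get-SpendTotal -Invoices $sampleInvoices -By Application
$byVendor      = Get-SpendTotal -Invoices $sampleInvoices -By Vendor
```

The same function answers both questions (lifetime spend per product and spend per vendor) by changing only the grouping property.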

Manage My Groups

This is a dashboard that I created to allow our end users to manage the membership of specific groups. It gets around the clunky native methods in Exchange by filtering the list to only the groups whose membership they can manage, and it uses the same user/group cache information so that only appropriate people can be added to a group.

Address Lookup

Using my authoritative address API, users can look up addresses and verify them across various systems.

In addition, I have tied it into a third-party API that standardizes and validates addresses to make sure that they actually exist.